Understanding the Java Buffer Pool Memory Space

The Java buffer pool space is located outside of the garbage collector-managed memory. It’s a way to allocate native off-heap memory. What’s the benefit of using a Java buffer pool? To answer this question, let’s first learn what byte buffers are.

Byte Buffer

Non-Direct Buffer

The java.nio package comes with the ByteBuffer class. It allows us to allocate both direct and non-direct byte buffers. There is nothing special about non-direct byte buffers: they are instances of HeapByteBuffer, created by the ByteBuffer.allocate() and ByteBuffer.wrap() factory methods. As the name of the class suggests, these are on-heap byte buffers.

Wouldn’t it be easier to allocate all the buffers on the Java heap space then? Why would anyone need to allocate something in native memory? To answer this question, we need to understand how operating systems perform I/O operations. Any read or write instruction is executed on a memory area that is a contiguous sequence of bytes. So does byte[] occupy a contiguous space on the heap? While technically it makes sense, the JVM specification does not give such a guarantee. What’s more interesting, the specification doesn’t even guarantee that the heap space itself will be contiguous! Although it seems rather unlikely that the JVM will place a one-dimensional array of primitives in different places in memory, a byte array from the Java heap space cannot be used in native I/O operations directly. It has to be copied to native memory before every I/O operation, which, of course, leads to obvious inefficiencies. For this reason, the direct buffer was introduced.
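To make the two factory methods for non-direct buffers concrete, here is a minimal sketch. The class name HeapBufferExample and its helper methods are hypothetical names chosen for illustration; only ByteBuffer.allocate(), ByteBuffer.wrap(), isDirect() and hasArray() come from java.nio.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hypothetical demo class; the names are illustrative only.
class HeapBufferExample {

    // Allocates a fresh on-heap buffer of the given capacity.
    static ByteBuffer allocateOnHeap(int capacity) {
        return ByteBuffer.allocate(capacity);
    }

    // Wraps an existing array; the buffer and the array share the same storage.
    static ByteBuffer wrapArray(byte[] bytes) {
        return ByteBuffer.wrap(bytes);
    }

    public static void main(String[] args) {
        ByteBuffer allocated = allocateOnHeap(16);
        System.out.println(allocated.isDirect()); // false
        System.out.println(allocated.hasArray()); // true

        byte[] data = "hello".getBytes(StandardCharsets.US_ASCII);
        ByteBuffer wrapped = wrapArray(data);
        wrapped.put(0, (byte) 'j'); // absolute put writes through to the array
        System.out.println(new String(data, StandardCharsets.US_ASCII)); // jello
    }
}
```

Because a wrapped buffer shares storage with its array, changes made through either one are visible through the other.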

Direct Buffer

A direct buffer is a chunk of native memory shared with Java, which native I/O operations can read from and write to directly, without an intermediate copy.

An instance of DirectByteBuffer can be created using the ByteBuffer.allocateDirect() factory method. Direct byte buffers avoid the extra copy that heap buffers need before native I/O, which makes them an efficient way to perform I/O operations, and thus they are used in many libraries and frameworks, for example in Netty.
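As a small illustration of the factory method, the sketch below allocates a direct buffer, writes one int into it and reads it back. The class DirectBufferExample, the method directWithInt and the 1024-byte capacity are arbitrary choices made for this example.

```java
import java.nio.ByteBuffer;

// Hypothetical demo class; the names and the capacity are illustrative only.
class DirectBufferExample {

    // Allocates native (off-heap) memory and writes one int into it.
    static ByteBuffer directWithInt(int value) {
        ByteBuffer buffer = ByteBuffer.allocateDirect(1024);
        buffer.putInt(value);
        buffer.flip(); // switch from writing to reading
        return buffer;
    }

    public static void main(String[] args) {
        ByteBuffer buffer = directWithInt(42);
        System.out.println(buffer.isDirect());  // true
        System.out.println(buffer.remaining()); // 4
        System.out.println(buffer.getInt());    // 42
    }
}
```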

Memory Mapped Buffer

A direct byte buffer may also be created by mapping a region of a file directly into memory. In other words, we can load a region of a file into a particular native memory region that can be accessed later. As you can imagine, it can give a significant performance boost if we need to read the content of a file multiple times. Thanks to memory-mapped files, subsequent reads will use the content of the file from memory, instead of loading the data from the disk every time it’s needed. A MappedByteBuffer can be created via the FileChannel.map() method.
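A read-only mapping could look like the sketch below. MappedReadExample and readMapped are hypothetical names, and the temporary file exists only to keep the example self-contained. The example assumes the file is small enough to map in one call, since a single mapping is limited to Integer.MAX_VALUE bytes.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Hypothetical demo class; the names are illustrative only.
class MappedReadExample {

    // Maps the whole file read-only and decodes its bytes as UTF-8.
    static String readMapped(Path file) throws IOException {
        try (FileChannel channel = FileChannel.open(file, StandardOpenOption.READ)) {
            MappedByteBuffer mapped =
                    channel.map(FileChannel.MapMode.READ_ONLY, 0, channel.size());
            return StandardCharsets.UTF_8.decode(mapped).toString();
        }
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("mapped-example", ".txt");
        file.toFile().deleteOnExit(); // best-effort cleanup of the temporary file
        Files.write(file, "mapped content".getBytes(StandardCharsets.UTF_8));
        System.out.println(readMapped(file)); // mapped content
    }
}
```

Note that the mapping remains valid after the channel is closed; it is released only when the buffer itself is garbage-collected.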

An additional advantage of memory-mapped files is that the OS can flush the buffer directly to the disk when the system is shutting down. Moreover, the OS can lock a mapped portion of the file from other processes on the machine.
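Flushing does not have to be left entirely to the OS: MappedByteBuffer.force() asks for changes to be written to the storage device explicitly. The sketch below writes through a read-write mapping and then forces it. MappedWriteExample and overwriteStart are hypothetical names, and the helper assumes the file already exists and is at least as long as the payload.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Hypothetical demo class; the names are illustrative only.
class MappedWriteExample {

    // Overwrites the first bytes of an existing file through a read-write mapping.
    static void overwriteStart(Path file, byte[] payload) throws IOException {
        try (FileChannel channel = FileChannel.open(file,
                StandardOpenOption.READ, StandardOpenOption.WRITE)) {
            if (channel.size() < payload.length) {
                throw new IllegalArgumentException("file is shorter than the payload");
            }
            MappedByteBuffer mapped =
                    channel.map(FileChannel.MapMode.READ_WRITE, 0, payload.length);
            mapped.put(payload);
            mapped.force(); // request that the changes be written to the storage device
        }
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("mapped-write", ".txt");
        file.toFile().deleteOnExit(); // best-effort cleanup of the temporary file
        Files.write(file, "hello world".getBytes(StandardCharsets.UTF_8));
        overwriteStart(file, "HELLO".getBytes(StandardCharsets.UTF_8));
        System.out.println(new String(Files.readAllBytes(file), StandardCharsets.UTF_8)); // HELLO world
    }
}
```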

Allocation is Expensive

One of the problems with direct buffers is that it’s expensive to allocate them. Regardless of the size of the buffer, calling ByteBuffer.allocateDirect() is a relatively slow operation. It is therefore more efficient to either use direct buffers for large and long-lived buffers or create one large buffer, slice off portions on demand, and return them to be re-used when they are no longer needed. A potential problem with slicing may occur when slices are not always the same size. The initial large byte buffer can become fragmented when allocating and freeing objects of different sizes. Unlike the Java heap, a direct byte buffer cannot be compacted, because it’s not a target for the garbage collector.
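The "one large buffer, sliced on demand" approach can be sketched as follows. SlicePool is a hypothetical class written for this example, not a JDK or Netty API. It sidesteps fragmentation by handing out fixed-size slices only, and it is not thread-safe.

```java
import java.nio.ByteBuffer;
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical fixed-size slice pool over one direct buffer; not thread-safe.
class SlicePool {
    private final Deque<ByteBuffer> free = new ArrayDeque<>();

    SlicePool(int sliceSize, int sliceCount) {
        // One expensive native allocation up front.
        ByteBuffer slab = ByteBuffer.allocateDirect(sliceSize * sliceCount);
        for (int i = 0; i < sliceCount; i++) {
            slab.limit((i + 1) * sliceSize);
            slab.position(i * sliceSize);
            free.push(slab.slice()); // a view over [position, limit) of the slab
        }
    }

    // Returns a free slice, or null when the pool is exhausted.
    ByteBuffer acquire() {
        return free.poll();
    }

    // Resets the slice's position and limit and makes it available again.
    void release(ByteBuffer slice) {
        slice.clear();
        free.push(slice);
    }

    int available() {
        return free.size();
    }

    public static void main(String[] args) {
        SlicePool pool = new SlicePool(256, 4);
        ByteBuffer slice = pool.acquire();
        System.out.println(slice.isDirect());  // true
        System.out.println(slice.capacity());  // 256
        System.out.println(pool.available());  // 3
        pool.release(slice);
        System.out.println(pool.available());  // 4
    }
}
```

A pool that supports variable-size slices would need its own free-list management and would be exposed to the fragmentation problem described above.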

Monitoring the Usage of a Java Buffer Pool

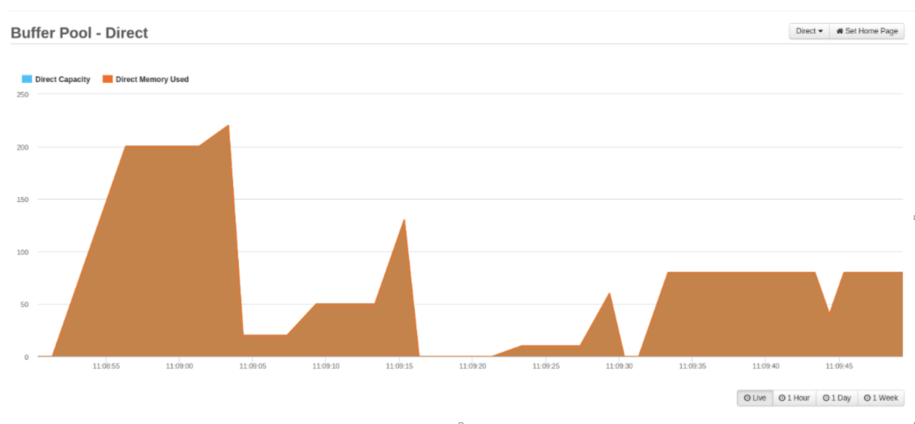

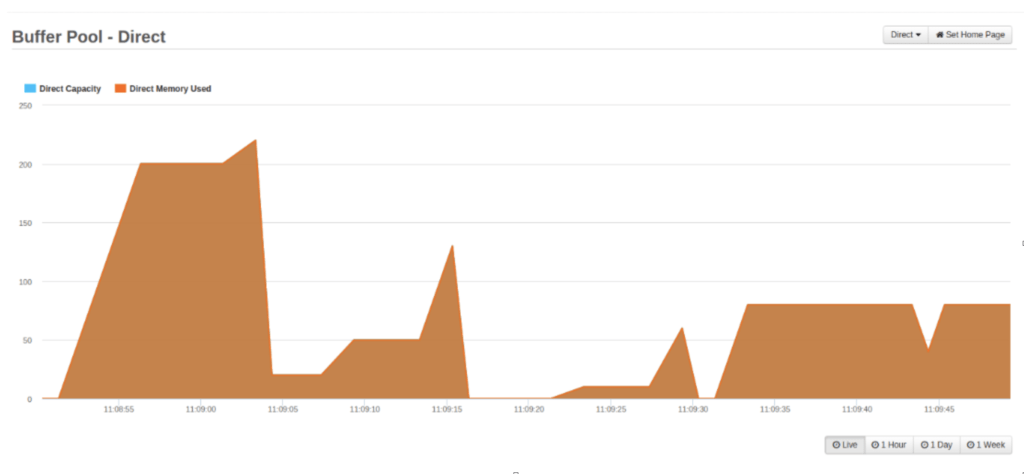

If you’re interested in the amount of direct or mapped byte buffers used by your application, then you can easily monitor them using FusionReactor. FusionReactor provides a breakdown of all the different memory spaces. Simply navigate to Resources and then Direct – Buffer Pools.

By default, the Direct Buffer Pool graph is displayed. You can switch to the Mapped Buffer Pool by clicking on a drop-down in the top right corner. Java will grow those pools as required, so the fact that Direct Memory Used covers Direct Capacity on the graph above means that all buffer memory allocated so far is in use.
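Independently of any monitoring tool, the JDK itself exposes buffer pool figures through the standard java.lang.management.BufferPoolMXBean interface, so the same kind of numbers can be read in code. The sketch below is a minimal example. BufferPoolStats and its pool() helper are hypothetical names, and the pool names "direct" and "mapped" are what HotSpot-based JVMs typically report, which is an assumption about the runtime rather than a guarantee.

```java
import java.lang.management.BufferPoolMXBean;
import java.lang.management.ManagementFactory;
import java.nio.ByteBuffer;

// Hypothetical helper class; the names are illustrative only.
class BufferPoolStats {

    // Looks up a buffer pool by name; returns null if the JVM has no such pool.
    static BufferPoolMXBean pool(String name) {
        for (BufferPoolMXBean p : ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)) {
            if (p.getName().equals(name)) {
                return p;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        // Allocate 1 MiB off-heap so the direct pool has something to report.
        ByteBuffer held = ByteBuffer.allocateDirect(1 << 20);

        for (BufferPoolMXBean p : ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)) {
            System.out.printf("%s: count=%d, used=%d bytes, capacity=%d bytes%n",
                    p.getName(), p.getCount(), p.getMemoryUsed(), p.getTotalCapacity());
        }

        // Use the buffer after printing so it stays reachable until here.
        System.out.println("held capacity: " + held.capacity());
    }
}
```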

Please note that you can limit the amount of direct byte buffer space that an application can allocate by using the -XX:MaxDirectMemorySize=N flag. Although this is possible, you would need a very good reason to do so.