When running in a Docker-based environment, it can be difficult to retain the logs for your application and for the monitoring tools you use. There are several options to overcome this:

- An external platform to consume the log files.

- A Docker volume that retains the files after the container has terminated.

There are pros and cons to each method; in our cloud solution we use both. First, we ship many of our logs to an external service for archiving, and for indexing to support search and alerts. Second, we create a dynamically generated Docker volume to store the logs for the application and FusionReactor’s own logs (that’s right, we use FusionReactor to monitor FusionReactor!).

Background

So, first of all, what is a Docker volume? To really learn the ins and outs of Docker volumes, I suggest reading the official Docker documentation (https://docs.docker.com/engine/admin/volumes/volumes/).

But in case you do not want to read all of that: a Docker volume is effectively a shared file system location that is not deleted when the container is destroyed. This lets you persist the data in the volume after the container has terminated, so you can go back and have a look at crash dumps, log files, etc.

Configuration

To configure a Docker container to use a volume, you only need to map a folder on the host to a folder inside the container (strictly speaking, Docker calls this host-path form a bind mount). On the command line, that looks like this:

```shell
-v /path/on/host:/path/in/container
```

If you are using docker-compose, add this to the service definition in your docker-compose file:

```yaml
volumes:
  - /path/on/host:/path/in/container
```

Runtime

So, if you run your container with a shared volume, how do you get the FusionReactor logs to appear in that location?

There are two options. Either you can mount volumes at the locations your FusionReactor logs are written to (and at the archive location, if you are using the default logger), or you can use a small bash script to change the log directory of the FusionReactor instance.

The first approach uses two Docker volumes, as shown here:

```yaml
volumes:
  - /opt/docker/share/fusionreactor/tomcat/log:/opt/fusionreactor/instance/tomcat/log
  - /opt/docker/share/fusionreactor/tomcat/archive:/opt/fusionreactor/instance/tomcat/archive
```

This places the logs from the FusionReactor instance inside the container in the directory /opt/docker/share/fusionreactor/tomcat on the host machine. Unfortunately, if you configure this for more than one container, all the FusionReactor instances will try to write to the same files. This can cause errors from the loggers and, more importantly, makes the logs hard to understand, as they will contain interleaved entries from more than one instance.

To overcome this problem, you can run a small script when the container starts that modifies the FusionReactor configuration so that each instance logs to its own directory (multi-tenant logging).

Dynamic Runtime

The first step is to create a Docker volume where all these log files can be placed; on our system we use /opt/docker/share. In the docker-compose file, add:

```yaml
volumes:
  - /opt/docker/share:/opt/docker/share
```

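As you can see, we use the same path inside the container as on the host. Next, we need to create a new folder inside this path, as part of the container's startup script, so that multiple FusionReactor instances can log here at the same time. We do this by running the following snippet in the entrypoint of the container. Because Docker sets a container's hostname to its short container ID unless you specify one, ${HOSTNAME} gives each container its own folder.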

```shell
# Give each container its own folder on the shared volume, keyed by hostname
FR_LOG_SHARE_PATH="/opt/docker/share/${HOSTNAME}"
mkdir --parents "${FR_LOG_SHARE_PATH}/log"
mkdir --parents "${FR_LOG_SHARE_PATH}/archive"

# Point FusionReactor's log and archive directories at the new folders
echo "logdir=${FR_LOG_SHARE_PATH}/log" >> /opt/fusionreactor/instance/tomcat/conf/reactor.conf
echo "fac.archive.root=${FR_LOG_SHARE_PATH}/archive" >> /opt/fusionreactor/instance/tomcat/conf/reactor.conf

# ... continue with starting your application ...
```

With this in place, FusionReactor will log and archive to the Docker volume, so you can access these files after the container has terminated.
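One thing to watch with the entrypoint snippet: it appends to reactor.conf every time it runs, so a container that is restarted (rather than recreated) keeps its filesystem and accumulates duplicate lines. Below is a minimal sketch of the same step wrapped in a function that appends each setting only once. The names `append_once` and `configure_fr_logging` are hypothetical, and the sketch assumes, as the post does, that reactor.conf is a plain `key=value` file and that `HOSTNAME` is set in the container.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: append LINE to FILE unless that exact line is already present.
# If FILE does not exist yet, grep fails quietly and the line creates it.
append_once() {
  local line="$1" file="$2"
  grep -qxF -- "${line}" "${file}" 2>/dev/null || printf '%s\n' "${line}" >> "${file}"
}

# Hypothetical helper: create per-container log/archive folders under SHARE_ROOT
# and point CONF_FILE (reactor.conf) at them, mirroring the entrypoint snippet.
configure_fr_logging() {
  local share_root="$1" conf_file="$2"
  local share_path="${share_root}/${HOSTNAME:?HOSTNAME must be set}"

  mkdir -p "${share_path}/log" "${share_path}/archive"
  append_once "logdir=${share_path}/log" "${conf_file}"
  append_once "fac.archive.root=${share_path}/archive" "${conf_file}"
}
```

In the entrypoint you would then call something like `configure_fr_logging /opt/docker/share /opt/fusionreactor/instance/tomcat/conf/reactor.conf` before starting the application.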

Conclusion

With this approach, you can retain the log files for your FusionReactor instances after the container has terminated. It also lets you add other files to these shares. For example, we also set the Java options that write a heap dump and an hs_err file to a similar Docker volume in the event of a JVM OutOfMemoryError or another fatal error.
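For the heap dump and hs_err files mentioned above, a minimal sketch using the standard HotSpot flags `-XX:+HeapDumpOnOutOfMemoryError`, `-XX:HeapDumpPath` and `-XX:ErrorFile` might look like this. The `jvm_crash_opts` function and the per-container `jvm` subfolder are hypothetical, and how you pass the options to the JVM depends on how your container starts Java.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print HotSpot options that send heap dumps and
# hs_err crash logs to DUMP_DIR (e.g. a folder on the shared volume).
jvm_crash_opts() {
  local dump_dir="$1"
  # Create the directory up front so HeapDumpPath refers to an existing directory
  mkdir -p "${dump_dir}"
  local opts=(
    "-XX:+HeapDumpOnOutOfMemoryError"
    "-XX:HeapDumpPath=${dump_dir}"
    # %p is left literal here; the JVM replaces it with its process ID
    "-XX:ErrorFile=${dump_dir}/hs_err_pid%p.log"
  )
  printf '%s\n' "${opts[*]}"
}
```

For a Tomcat container started through catalina.sh, for example, the result could be added to `CATALINA_OPTS` before launch: `export CATALINA_OPTS="${CATALINA_OPTS:-} $(jvm_crash_opts "/opt/docker/share/${HOSTNAME}/jvm")"`. When `HeapDumpPath` names a directory, the JVM writes the dump there as `java_pid<pid>.hprof`.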

Unfortunately, this approach has the side effect of ‘leaking’ disk space, as the Docker daemon will not clean up the host directory /opt/docker/share. This is easily overcome with a small cron job or another process that periodically deletes older files.
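The cleanup job could be as simple as the following sketch. It assumes a `find` that supports `-delete` (GNU and BSD find both do) and that any file under the share not modified for a given number of days is safe to remove. The `prune_share` name and the retention period are placeholders.

```shell
#!/usr/bin/env bash
set -euo pipefail

# prune_share ROOT DAYS  (hypothetical helper)
# Prints and deletes regular files under ROOT whose age in whole days exceeds DAYS.
# find discards fractional days, so DAYS=7 removes files at least 8 days old.
# Directories are left alone, so a running container keeps its log folders.
prune_share() {
  local root="$1" days="$2"
  find "${root}" -type f -mtime "+${days}" -print -delete
}
```

Running it nightly from cron, for example via a crontab line such as `0 3 * * * /usr/local/bin/prune-docker-share.sh`, where that (hypothetical) script calls `prune_share /opt/docker/share 14`, would keep roughly two weeks of history.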

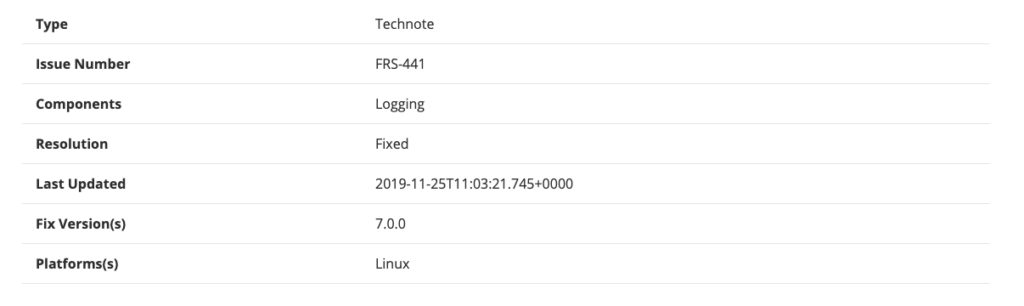

Issue Details