FusionReactor vs The Competition: Complete Observability Platform Analysis

Data-driven comparison of FusionReactor against major observability platforms based on verified G2 user reviews across 10 satisfaction categories. Executive Summary: We analyzed verified G2 user reviews comparing FusionReactor against seven major observability … Read More

Blog Posts

FusionReactor vs SolarWinds

FusionReactor vs SolarWinds APM: Application Monitoring Comparison. Comparing FusionReactor and SolarWinds APM platforms based on verified G2 user reviews. See how support quality, ease of use, and overall satisfaction differ between these solutions. Infrastructure Monitoring Heritage vs. Purpose-Built APM: SolarWinds … Read More

FusionReactor vs Elastic APM

FusionReactor vs Elastic APM: Application Observability Comparison. Comparing FusionReactor and Elastic APM observability platforms based on verified G2 user reviews. See how support quality, ease of use, and overall satisfaction differ between these solutions. Elastic Stack Extension vs. Integrated APM … Read More

FusionReactor vs Honeycomb

FusionReactor vs Honeycomb: Observability Platforms for Modern Applications. Comparing FusionReactor and Honeycomb observability platforms based on verified G2 user reviews. See how support quality, ease of use, and overall satisfaction differ between these solutions. Modern Observability: Different Philosophies. Honeycomb pioneered … Read More

FusionReactor vs Grafana

FusionReactor vs Grafana Labs: Observability Platform Comparison. Comparing FusionReactor and Grafana Labs observability platforms based on verified G2 user reviews. See how support quality, ease of use, and overall satisfaction differ between these solutions. Grafana Included + Full LGTM Stack … Read More

FusionReactor vs Sentry

FusionReactor vs Sentry: Application Monitoring Platforms Compared. Comparing FusionReactor and Sentry error monitoring and observability platforms based on verified G2 user reviews. See how support quality, ease of use, and overall satisfaction stack up between these solutions. Error Monitoring vs. … Read More

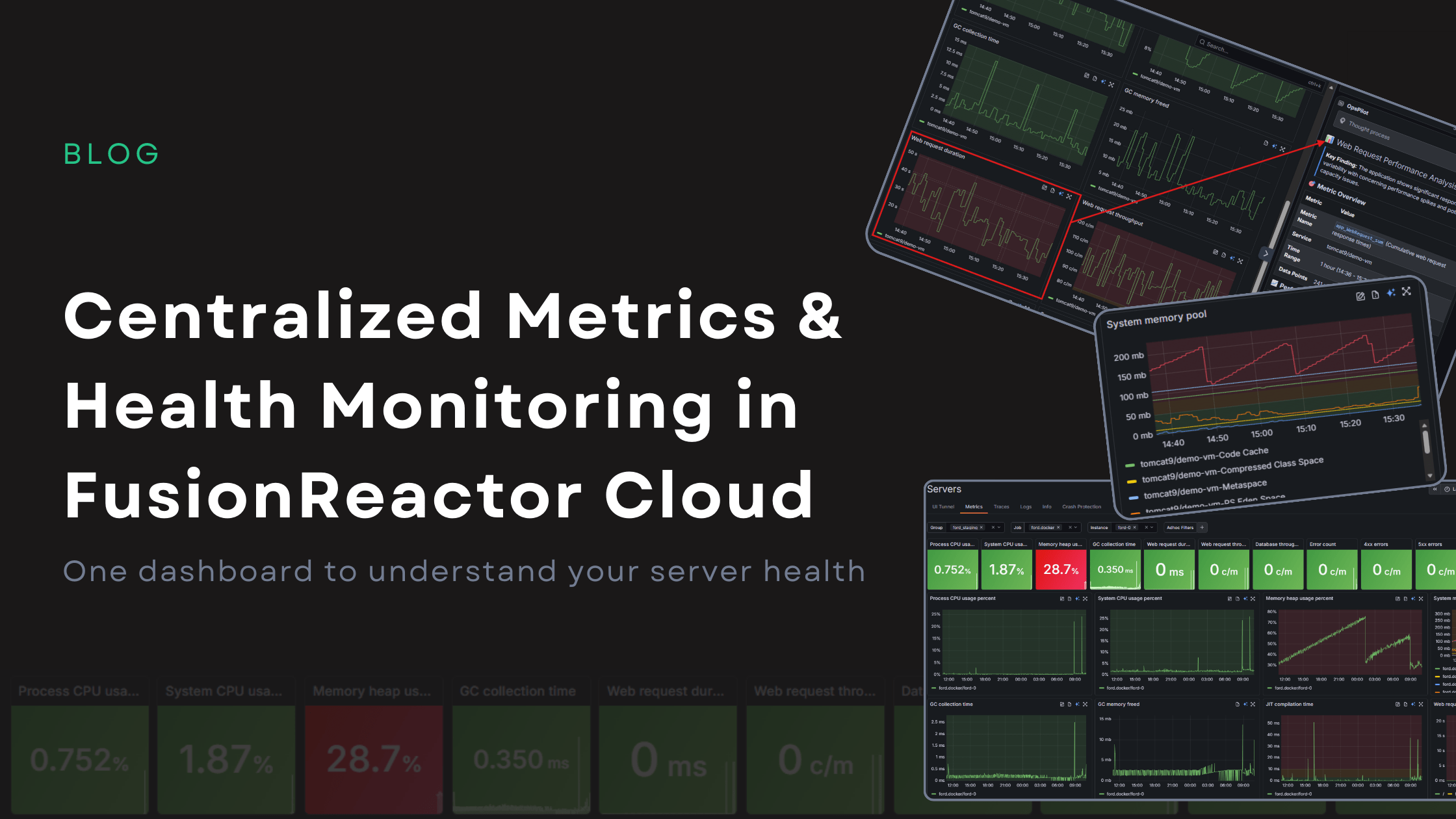

Centralized Metrics and Health Monitoring in FusionReactor Cloud

Centralized Metrics: One Dashboard for Complete Server Health in FusionReactor Cloud. Metrics are only useful if they help you take action. FusionReactor Cloud’s new Centralized Metrics Viewer turns raw monitoring data into clear, meaningful visual indicators, making it easy to … Read More

FusionReactor vs New Relic

FusionReactor vs New Relic: Observability Platform Comparison Based on Real User Reviews. Comparing FusionReactor and New Relic observability platforms based on verified G2 user reviews. See how support quality, implementation speed, and ease of use stack up between these solutions. … Read More

FusionReactor vs Splunk

FusionReactor vs Splunk: Which Observability Platform Delivers Better User Experience? Comparing FusionReactor and Splunk observability platforms based on verified G2 user reviews. See how deployment speed, support quality, and ease of use differ between these solutions. The Observability Platform Decision … Read More

FusionReactor vs Dynatrace

FusionReactor vs Dynatrace: Real User Reviews Reveal the Hidden Cost of Enterprise Observability. Comparing FusionReactor and Dynatrace observability solutions based on verified G2 user reviews. See how user satisfaction, ease of use, and support quality stack up between platforms. The … Read More

OpsPilot: AI Troubleshooting & Root Cause Analysis Built Into FusionReactor Cloud

FusionReactor Cloud offers powerful AI troubleshooting, designed not just to show you what went wrong, but also to immediately help you understand the “why” and move straight to the fix. With OpsPilot, FusionReactor’s revolutionary AI assistant, artificial intelligence is no longer … Read More

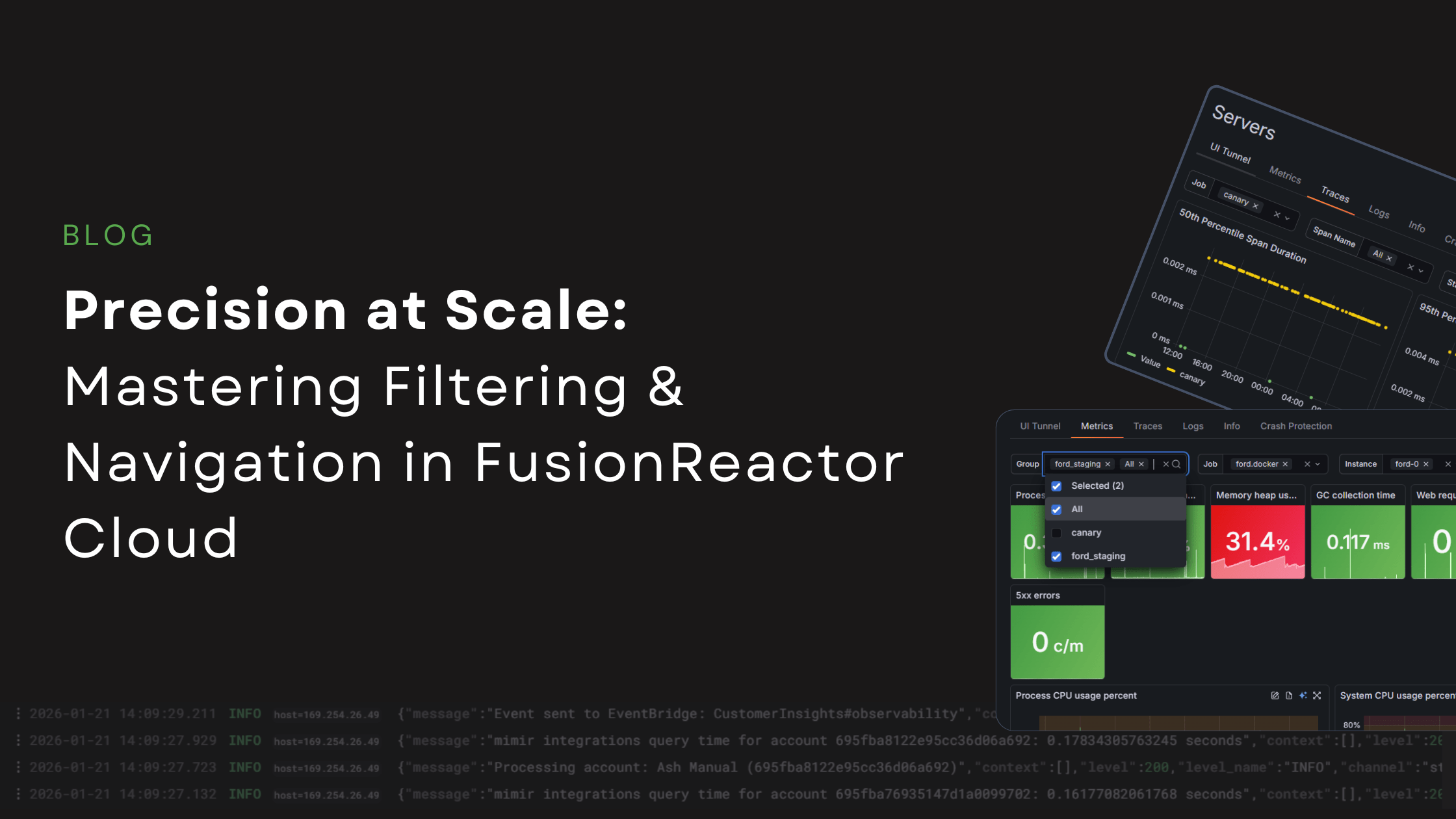

Precision at Scale: Mastering Filtering and Navigation in FusionReactor Cloud

When you are managing a handful of servers, navigation is simple. However, when your environment scales to dozens or even hundreds of instances, that simplicity collapses into chaos without the right architecture. FusionReactor Cloud’s new server experience is built from … Read More

Why OTLP Is Becoming the Universal Language of Observability

In the past, every monitoring tool (such as Datadog or New Relic) had its own proprietary “language.” If you wanted to switch tools, you had to re-instrument your code. OTLP, the OpenTelemetry Protocol, is a universal, vendor-neutral language for your app’s telemetry data. It allows you to: … Read More

Two Game-Changing Features Now Available to All FusionReactor Cloud Users

We have exciting news for the FusionReactor community: as of January 12, 2026, Anomaly Detection and Custom Dashboards are now available to all FusionReactor Cloud users across every plan tier. These powerful observability capabilities, previously exclusive to higher-tier plans, are … Read More

OpenTelemetry Collector v1.49.0/v0.143.0: What’s New in January 2026

The OpenTelemetry community released v1.49.0/v0.143.0 of the OpenTelemetry Collector on January 5-6, 2026, bringing significant updates to the world’s most popular open-source observability framework. As OpenTelemetry continues its rapid adoption, with 48.5% of organizations already using it and another … Read More

Beyond the Three Pillars: Why Unified Telemetry is the Backbone of AI Observability

For years, the observability world has worshipped at the altar of the “Three Pillars”: Metrics, Logs, and Traces. But let’s be honest: in the heat of a production outage, these pillars often feel more like silos. You see a latency … Read More

How AI-Powered Observability Solved a Complex Microservices Mystery in Minutes

When production breaks at 3 AM, every second counts. See how OpsPilot AI reduced troubleshooting time … Read More

FusionReactor Celebrates 20 Years: Innovation, Team Culture, and Continuous Evolution in Application Performance Monitoring

Introduction: Two Decades of Application Performance Monitoring Excellence. December 2025 marks a significant milestone in the history of application performance monitoring: FusionReactor celebrates its 20th anniversary. What began in 2005 as a ColdFusion troubleshooting tool has evolved into a comprehensive … Read More

From “Black Box” to “Big Green Button”: A 20-Year Journey with FusionReactor

An interview with David Tattersall, CEO of Intergral, makers of FusionReactor. It began with a performance crisis: a high-stakes customer project running on ColdFusion that, when it failed, gave engineers zero visibility into the cause. That challenge led co-founders … Read More

The Big Green Button: 20 Years of FusionReactor, From Survival Tool to AI-Powered Observability

By Darren Pywell, CTO of FusionReactor. It started with a wall. Specifically, the wall between my office and a key customer’s team. Every time their critical ColdFusion application froze, … Read More

Coming Soon to FusionReactor Cloud: Next-Gen Alerting, a Brand New Look, and More Power for Starter Plans

UPDATE (January 12, 2026): Anomaly Detection and Custom Dashboards are now available to ALL Cloud users. We are continually evolving FusionReactor Cloud to provide the deepest, most intuitive observability platform for your applications. Today, we are excited to give you … Read More

Real-Time Power Meets Historical Depth: The Observability Revolution in FusionReactor Cloud

Modern systems don’t fail on a schedule. Performance issues, restarts, and outages occur in real time, often without warning. That’s why the new Servers experience in FusionReactor Cloud gives you a potent combination: live observability seamlessly fused with deep historical … Read More

FusionReactor’s Winter 2026 G2 Awards: Record-Breaking Recognition with 105+ Badges

105+ Awards Across 10+ Categories Cement FusionReactor’s Position as Industry-Leading Observability Platform. We’re thrilled to announce that FusionReactor has achieved unprecedented recognition in the G2 Winter 2026 rankings, earning more than 105 awards across multiple categories and market segments. This … Read More

From Interns to Architects: A Decade of Excellence with Ash and Mikey

FusionReactor is turning 20! It’s a massive milestone, but what makes a company last two decades isn’t just the code – it’s the people who write it, refine it, and live it. Today, we are zooming in on two team … Read More

Reimagining Server Monitoring in FusionReactor Cloud

Get ready to experience monitoring like never before! The latest features in FusionReactor Agent 2025.2 are transforming how you interact with your application data in FusionReactor Cloud. This release isn’t just an update; it’s a complete redesign of your diagnostic workflow, blending real-time … Read More

Don’t Buy an AI-Native Black Box. Choose Open Standards + Real Reasoning

The observability market is flooded with vendors racing to claim the title of “first AI observability platform.” But the label matters far less than what the AI actually delivers to engineers in production. While competitors lock you into proprietary pipelines … Read More

Beyond the Code: 20 Years of Growth with FusionReactor’s Greg Spencer

As FusionReactor celebrates its 20th anniversary, we’re taking a moment to look back at the journey through the eyes of one of its longest-serving members, Greg Spencer. Greg joined back in August 1999, and his tenure spans the company’s entire evolution … Read More