Basic load testing with Locust

No-one likes to get caught out by scaling issues. Your app or site goes viral, or a favourable blog post sends you a tonne of unexpected traffic, and without some preparation all hell can break loose: your app fails and users are left disappointed (not least the business owners).

One of the most common ways to prepare for unexpected traffic is load testing. Load tests can range from blindingly simple (hit endpoint ‘x’ a number of times) to complex constructions that model user behaviour, including logins, logouts, mock purchases and the like.

Having a baseline test that you can replicate consistently, perhaps after an environment change, gives you insight into the capacity of your setup.

Load Testing Tools

There are a fair few load testing tools. Some are web-based (SaaS) offerings, where you use a ready-made engine that fires mock traffic at your app from a bank of machines, usually cloud instances; naturally, the more traffic or fake users you want to throw at your site, the more you pay.

For lower-level tests, the other common option is to run a script or app on your local machine to generate and fire those requests. You’ll be bound by your local CPU, RAM and network capacity, and all the traffic will come from your IP. Most of the time this is fine for a low-level test, but when you’re trying to “outgun” a cluster with scalable resources, you’re probably going to run out of steam. Some tools support distributing a load test over multiple machines, and can therefore simulate millions of simultaneous users.

A simple load test with Locust.io

One easy-to-use, open-source tool is Locust (https://locust.io). It’s Python-based, but the syntax is simple and easy to get your head around. Assuming you already have `pip`, installing it locally is straightforward: `pip install locust`. Once installed, run `locust -V` to verify your version.

Locust runs a local web server where you can monitor your progress. To get started, create a `locustfile.py` in your working directory (Locust looks for a file with that name by default) and run `$ locust`.

A typical locustfile

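```python
# Written against the Locust 1.0+ API: HttpUser replaces the older HttpLocust
# class, and wait_time replaces min_wait/max_wait (which were in milliseconds).
from locust import HttpUser, TaskSet, between


def index(l):
    # Request the site root; verify=False skips TLS certificate verification
    l.client.get("/", verify=False)


def task1(l):
    # Request the (stateless) API endpoint under test
    l.client.get("/v1/environment", verify=False)


class UserBehavior(TaskSet):
    # Task weights: task1 is picked roughly 7 times as often as index
    tasks = {index: 1, task1: 7}


class WebsiteUser(HttpUser):
    tasks = [UserBehavior]
    # Each simulated user waits between 5 and 9 seconds between tasks
    wait_time = between(5, 9)
```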

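This is about as simple as it gets. Each simulated user requests either the site root or the `/v1/environment` endpoint, choosing at random but weighted so that `/v1/environment` is picked roughly seven times as often, and waits 5 to 9 seconds between requests. Obviously the endpoints could be anything: an about page, or whatever.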
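To see what the `{index: 1, task1: 7}` weights mean in practice, here is a plain-Python sketch of weighted random selection. It illustrates the idea rather than reproducing Locust’s own code: each task is repeated once per unit of weight and picks are made uniformly from that list, so over many picks `task1` should account for about 7/8 of requests. The `expand_weights` and `pick_counts` helpers and the fixed seed are hypothetical, for illustration only.

```python
import random
from collections import Counter


def expand_weights(weights):
    """Repeat each task name once per unit of weight, e.g. {"a": 1, "b": 2} -> ["a", "b", "b"]."""
    expanded = []
    for name, weight in weights.items():
        expanded.extend([name] * weight)
    return expanded


def pick_counts(weights, picks, seed=42):
    """Pick `picks` tasks uniformly from the expanded list and count how often each was chosen."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    pool = expand_weights(weights)
    return Counter(rng.choice(pool) for _ in range(picks))


if __name__ == "__main__":
    weights = {"index": 1, "task1": 7}
    counts = pick_counts(weights, 80_000)
    total = sum(counts.values())
    for name, count in sorted(counts.items()):
        # Expected shares: index about 1/8 (12.5%), task1 about 7/8 (87.5%)
        print(f"{name}: {count / total:.1%}")
```

Because the choice is random, short runs will wobble around these proportions; the ratio only settles down over a reasonable number of requests.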

Running The Test

Start the web interface with `$ locust --host=https://yourdomain.com` (replacing yourdomain.com as appropriate) and then open `http://localhost:8089/`. Note that 127.0.0.1 isn’t bound, so use localhost. You’ll be able to set the number of users to simulate and the rate at which they are spawned, then start the “swarm”. Start with about 300 users, spawning 50 per second.

When you’re done, press Ctrl+C in the terminal to stop the Locust process, which should print a summary of the results.

The Results

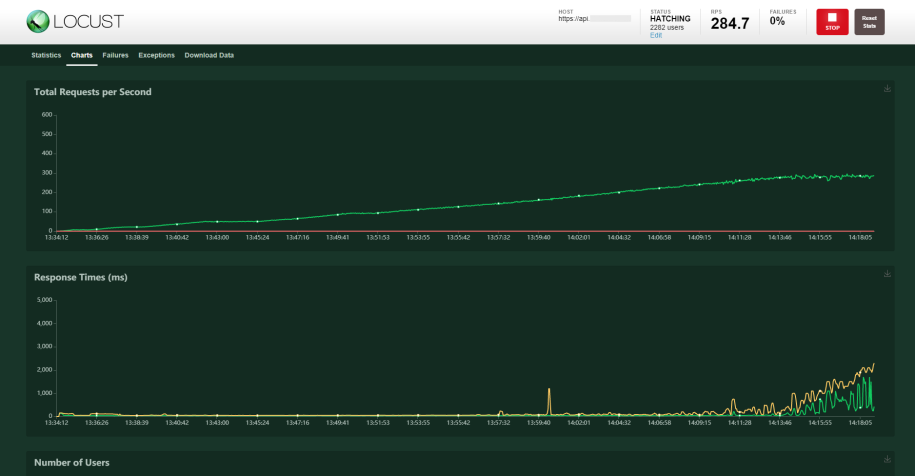

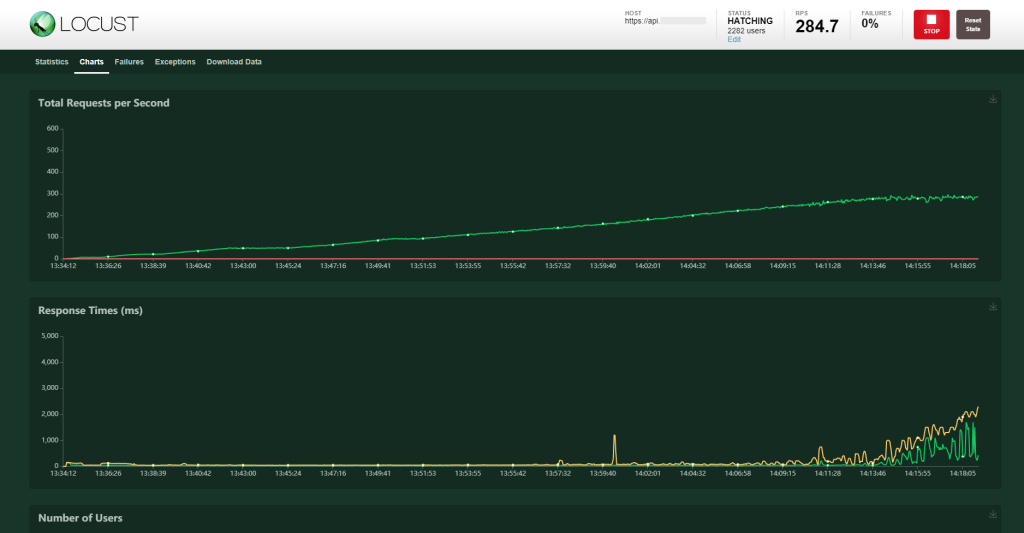

The graph should be fairly self-explanatory: over the course of about an hour, the number of requests per second climbs to c.285 as the simulated user count rises to about 2,000, and things start to get a bit wobbly in the last 5 minutes or so.
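A quick sanity check on those numbers: in a closed-loop test like this, each simulated user sends a request, waits for the response, then sleeps, so throughput is roughly users / (mean wait + mean response time). The sketch below assumes the uniform 5 to 9 second wait from the locustfile (mean 7 s) and, while the app is healthy, treats response time as negligible; both are simplifying assumptions, and `estimate_rps` / `users_for_rps` are hypothetical helpers. With 2,000 users it gives about 286 requests per second, which lines up with the c.285 figure (Locust’s charts plot requests per second).

```python
def estimate_rps(users, min_wait_s, max_wait_s, mean_response_s=0.0):
    """Approximate steady-state requests/second for a closed-loop load test.

    Assumes each user alternates: send one request, wait for the response,
    then sleep for a wait time drawn uniformly from [min_wait_s, max_wait_s].
    """
    mean_wait_s = (min_wait_s + max_wait_s) / 2
    cycle_s = mean_wait_s + mean_response_s  # average time per request, per user
    return users / cycle_s


def users_for_rps(target_rps, min_wait_s, max_wait_s, mean_response_s=0.0):
    """Invert estimate_rps: roughly how many simulated users a target rate needs."""
    mean_wait_s = (min_wait_s + max_wait_s) / 2
    return target_rps * (mean_wait_s + mean_response_s)


if __name__ == "__main__":
    print(f"{estimate_rps(2000, 5, 9):.1f} req/s")       # 285.7 with negligible response time
    print(f"{estimate_rps(2000, 5, 9, 1.0):.1f} req/s")  # 250.0 if responses average 1 s
    print(f"{users_for_rps(100, 5, 9):.0f} users")      # 700 users for about 100 req/s
```

One consequence of the closed-loop model: once responses slow down, each user’s cycle gets longer, so the request rate flattens even though the user count keeps rising.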

Looking In FusionReactor

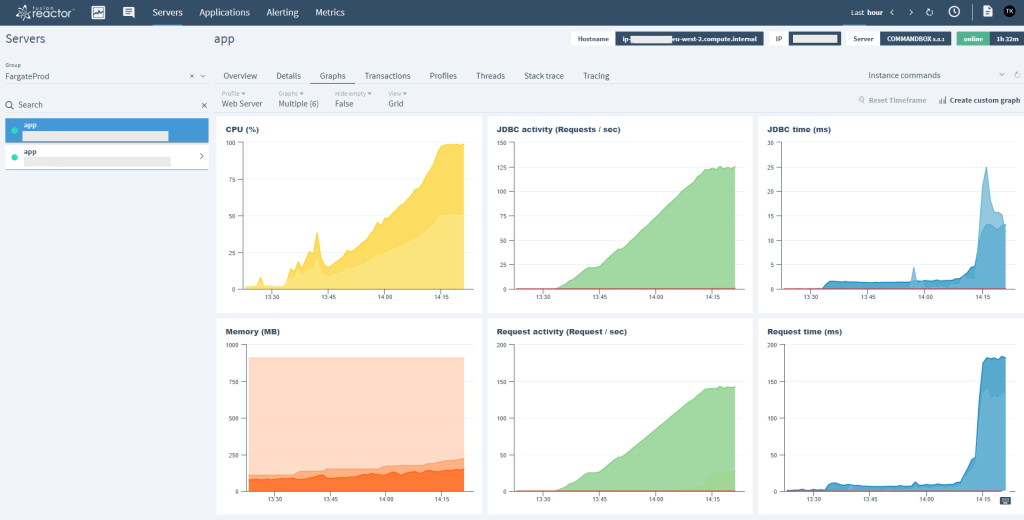

The endpoints I’m using in this test are deliberately simple: there are no user sessions (it’s a stateless API) and only one DB call per request. This is a low-powered Fargate cluster running CommandBox and a CFWheels app. These containers have only 2GB RAM and 1 CPU unit.

Looking at the results in FusionReactor (Cloud), we can see the two app instances/tasks. Selecting one shows us (literally) half the traffic, as they sit behind an A-B load balancer. Memory usage stays in check, and CPU rises as expected until it peaks at 100%, after which response time goes through the roof.
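Why does response time explode once CPU saturates, rather than degrading gently? A standard idealised model, the M/M/1 queue (a single server with random arrivals), gives mean response time R = S / (1 - ρ), where S is the service time per request and ρ is utilisation. This is a textbook approximation, not a model fitted to this app, and the 50 ms service time below is a made-up illustration value.

```python
def mm1_response_time(service_time_s, utilisation):
    """Mean response time (queueing + service) for an idealised M/M/1 queue.

    utilisation = arrival rate / service rate; at or above 1.0 the queue
    grows without bound, so there is no finite steady-state response time.
    """
    if not 0 <= utilisation < 1:
        return float("inf")
    return service_time_s / (1 - utilisation)


if __name__ == "__main__":
    service_time_s = 0.05  # hypothetical 50 ms of CPU work per request
    for rho in (0.5, 0.8, 0.9, 0.95, 0.99, 1.0):
        r = mm1_response_time(service_time_s, rho)
        print(f"utilisation {rho:.0%}: mean response {r * 1000:.0f} ms")
```

At 50% utilisation the mean response is twice the service time; at 99% it is a hundred times the service time, which is the “through the roof” shape seen once the CPU pins at 100%.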

Conclusion

What’s nice about this approach is that it gives us an idea of baseline behaviour under simple load. It isn’t trying to be a “complex” user who might be making POST requests, invoking CFHTTP calls, generating PDFs or doing anything else taxing; it’s merely a reference point for understanding the raw throughput of the setup.

A proper load test would emulate a typical user’s interactions with the site: some users would drop off after one hit, some would stick around, some would log in, and so on. Building a more comprehensive test will always be application-specific.
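As a starting point for that kind of test, it can help to write the mix of user behaviours down as data before turning it into Locust tasks. The plain-Python sketch below is hypothetical throughout: the persona names, their weights and every path other than `/` and `/v1/environment` (`/about`, `/login`, `/account`, `/logout`) are made-up placeholders to replace with your own application’s journeys.

```python
import random

# Hypothetical personas: name -> (weight, journey as a list of (HTTP method, path)).
PERSONAS = {
    "bounce": (6, [("GET", "/")]),  # drops off after one hit
    "browser": (3, [("GET", "/"), ("GET", "/about"), ("GET", "/v1/environment")]),
    "member": (1, [("GET", "/"), ("POST", "/login"), ("GET", "/account"), ("POST", "/logout")]),
}


def sample_personas(n, seed=1):
    """Draw n persona names in proportion to their weights (seeded for repeatability)."""
    rng = random.Random(seed)
    names = list(PERSONAS)
    weights = [PERSONAS[name][0] for name in names]
    return rng.choices(names, weights=weights, k=n)


def requests_per_session(name):
    """Number of requests a single session of the given persona makes."""
    return len(PERSONAS[name][1])


def expected_requests_per_session():
    """Weighted mean session length across all personas."""
    total_weight = sum(weight for weight, _ in PERSONAS.values())
    weighted = sum(weight * len(journey) for weight, journey in PERSONAS.values())
    return weighted / total_weight


if __name__ == "__main__":
    print(sample_personas(10))
    print(f"Mean requests per session: {expected_requests_per_session():.1f}")
```

In Locust 1.0+, each persona could then become its own `HttpUser` subclass, with the persona’s weight mapped to that class’s `weight` attribute and its journey written as tasks.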