We find ourselves at a fascinating crossroads where artificial intelligence and observability are converging in unexpected ways. The recent emergence of Anthropic’s Claude Code, an agentic coding assistant in beta at the time of writing, invites us to reconsider what it means to truly “observe” our systems, our code, and perhaps even our own thought processes as developers.

The Observer Effect in Software Development

Physicists have long understood the observer effect: the principle that the act of measuring a system inevitably disturbs it. Traditional observability in software has focused on watching systems run, collecting metrics, logs, and traces to understand behavior in production environments. But this approach maintains a clear separation between the observer and the observed.

What happens when this boundary begins to blur?

Claude Code represents something fundamentally different—not just a passive observer of our code, but an active participant that bridges the gap between human intention and machine execution. It lives in our terminal, understands our codebase holistically, and can translate natural language into direct action. This shift raises profound questions about the nature of development itself.

The Unseen Dimensions of Code

Traditional observability gives us visibility into specific metrics and behaviors, but there remain dimensions of our systems that have proven stubbornly difficult to observe:

- The historical context that led to architectural decisions

- The tacit knowledge that exists only in developers’ minds

- The complex relationships between seemingly disparate components

- The intended behavior versus the implemented reality

These “dark matter” aspects of software development have traditionally been addressed through documentation, knowledge transfer sessions, and the slow accretion of institutional knowledge. But these approaches scale poorly and deteriorate over time.

Claude Code offers a glimpse of a future where these dimensions become directly observable through conversation:

> why was the payment service designed this way?

> what edge cases should I consider when modifying the user authentication flow?

> which parts of the codebase would be affected by changing this interface?

By turning code into a conversational artifact, AI assistants like Claude Code make the previously unobservable aspects of software directly accessible through dialogue.

From Observability to Conversation

For decades, programming has been a fundamentally one-sided conversation. Developers issue commands, and machines execute them precisely as instructed: no more, no less. Observability tools emerged to help us understand what happens after those commands are executed.

But what if writing software could become a genuine dialogue?

When a developer asks Claude Code to “explain how the caching layer works” or “think deeply about the edge cases in our authentication flow,” something remarkable happens. The code itself becomes not just observable but interpretable. The assistant doesn’t just show you what’s happening (as traditional observability does) but helps you understand why it’s happening and what it means.

This represents a profound shift from purely empirical observation to interpretive understanding. Our relationship with code evolves from command-and-response to a collaborative conversation where both parties contribute meaning and insight.

The Cognitive Mirrors of Development

The most intriguing aspect of tools like Claude Code is how they serve as cognitive mirrors, reflecting our thinking processes in ways that traditional observability tools cannot.

When Claude Code is asked to “think deeply about how we should architect the new payment service,” it doesn’t just search and retrieve information—it models a deliberative process that mimics human reasoning. This externalization of thought becomes a new form of observability—not just of the code, but of the reasoning processes that shape it.

This raises fascinating questions about the nature of software development itself:

- What aspects of programming are fundamentally creative versus analytical?

- How do our mental models of code differ from the actual implementation?

- What implicit biases or assumptions influence our architectural decisions?

By making these cognitive processes more observable, AI assistants like Claude Code may help us become more reflective practitioners, more aware of our own thinking patterns and creative processes.

The Ethical Dimensions of AI-Enhanced Observability

With this new paradigm come critical ethical considerations. Traditional observability tools watch our systems; AI assistants like Claude Code watch our code, our commands, and potentially our development patterns. This raises important questions about privacy, agency, and the changing nature of creative work.

There’s something deeply personal about the code we write—it reflects our thought processes, problem-solving approaches, and even our aesthetic sensibilities. As AI assistants become more integrated into these processes, we must consider:

- How does AI-assisted development change our relationship with our own creative output?

- What happens to tacit knowledge when it can be externalized through conversation with an AI?

- Who “owns” the insights generated through these collaborative processes?

These questions have no simple answers, but they deserve our careful consideration as we navigate this new terrain.

From Observing to Understanding to Creating

The evolution from traditional observability to AI-assisted development suggests a broader trajectory—from passive observation to active understanding to collaborative creation.

Traditional observability tools help us see what’s happening. AI assistants like Claude Code help us understand why it’s happening. The future may bring tools that help us reimagine what could be happening.

This progression points toward a future where the boundaries between human and machine contributions become increasingly fluid. Rather than simply observing our systems, we might enter into genuine creative partnerships with AI—partnerships that enhance both human creativity and machine capability.

The Road Ahead: Symbiotic Development

As we look to the horizon, it’s worth considering what might be possible when human creativity and AI capabilities merge more seamlessly in the development process:

- Systems that continuously evolve based on conversational feedback

- Development environments that adapt to individual thinking styles and workflows

- Code that becomes increasingly self-documenting and self-explaining

- Creative exploration of solution spaces too complex for humans to navigate alone

These possibilities suggest a future where observability transforms from a technical practice focused on system monitoring to a fundamental aspect of how we create, understand, and evolve software—a continuous dialogue between human intention and machine implementation.

The Symbiosis of Traditional and AI-Enhanced Observability

Where do established observability platforms like FusionReactor fit into this emerging landscape? Far from being rendered obsolete, these specialized tools become even more valuable when paired with AI coding agents, creating a symbiotic relationship that bridges development and production environments.

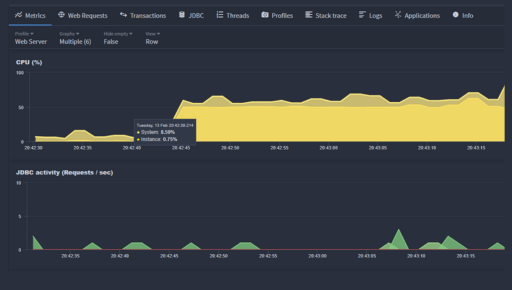

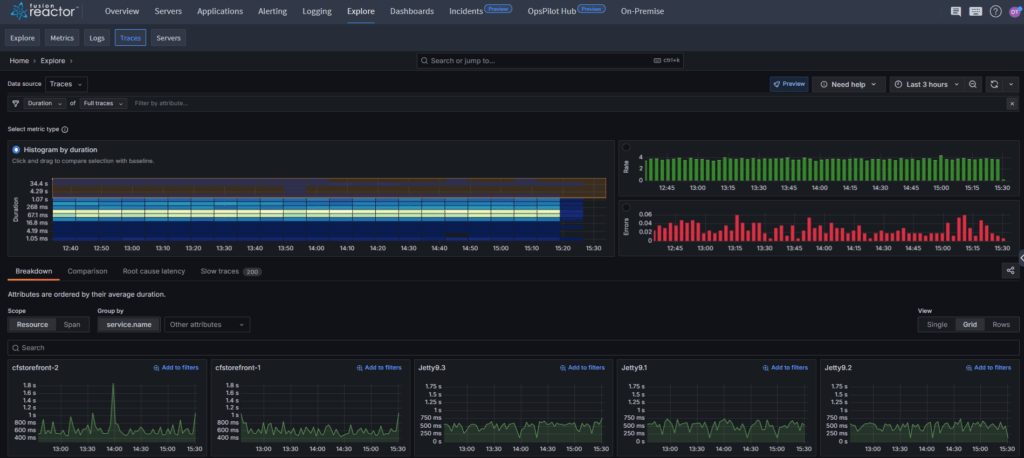

FusionReactor and similar mature observability platforms excel at what they were designed to do: providing deep, real-time insights into application performance, pinpointing bottlenecks, tracking transactions across distributed systems, and alerting teams to anomalies. These tools offer unparalleled visibility into the runtime behavior of applications—the “what is happening” in production environments.

What’s fascinating is how AI coding agents can form a complementary feedback loop with these traditional observability platforms:

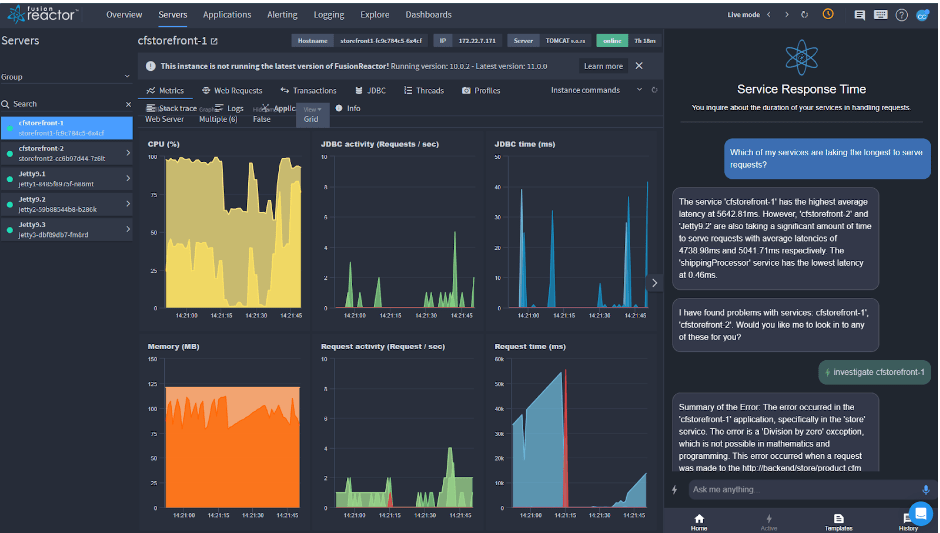

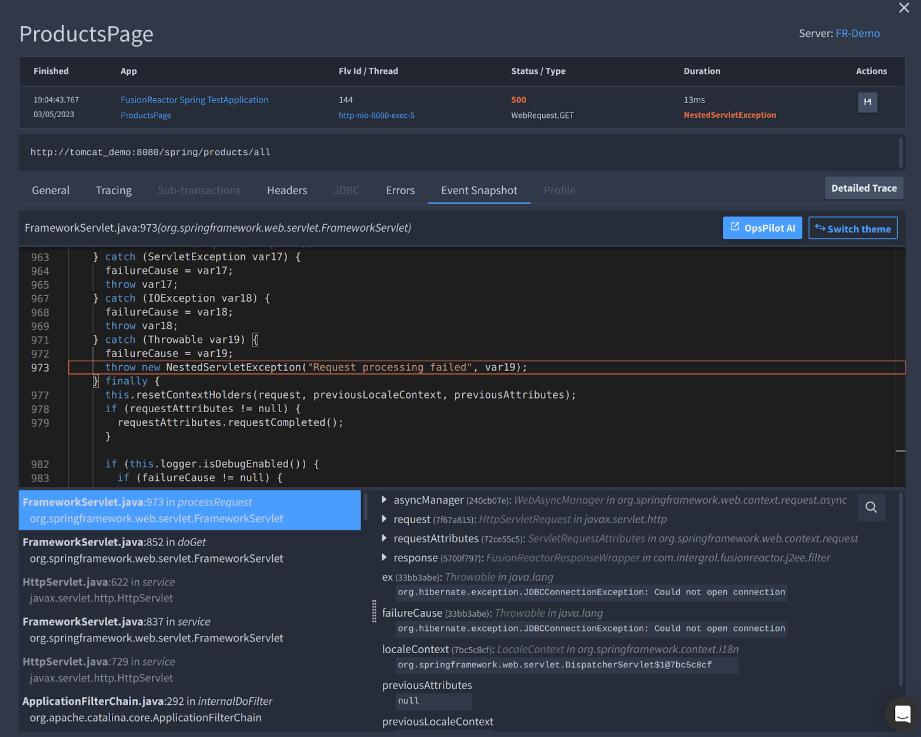

- Runtime Insights Informing Development Decisions: When FusionReactor identifies performance bottlenecks or unusual patterns in production, Claude Code can help developers understand the underlying code structures causing these issues. “Explain why our transaction times spike during these specific database operations” becomes a query that bridges production metrics and development understanding.

- Preventative Observability: While FusionReactor excels at detecting issues in running systems, coding agents can help prevent them from occurring in the first place. By asking “Will this code change impact our memory usage patterns?”, developers can anticipate potential issues before deployment.

- Contextual Interpretation of Metrics: Raw metrics from observability platforms become far more meaningful when interpreted through the lens of codebase knowledge. “FusionReactor shows increased latency in our payment processing—which recent code changes might be responsible?” creates a powerful connection between observed behavior and implementation details.

- Closing the Feedback Loop: Perhaps most powerfully, this pairing creates a tighter feedback loop between production observations and development actions. When FusionReactor identifies an issue through its sophisticated monitoring, agents such as Claude Code can help translate that finding into specific, actionable code changes.
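The “which recent code changes might be responsible?” step can be made concrete with a small amount of glue code. What follows is a minimal, hypothetical Python sketch, not a FusionReactor or Claude Code API. It assumes latency samples and deploy records have already been exported from your monitoring and CI/CD tooling into the simple `LatencySample` and `Deploy` shapes defined here (both invented for illustration). It flags the first sample whose p95 latency exceeds 1.5 times a rolling median baseline, collects deploys from the preceding two hours, and formats that correlation as a question you could hand to a coding agent. The thresholds and the toy values in the usage block are arbitrary placeholders.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class LatencySample:
    # Hypothetical shape for one latency data point exported from an APM tool.
    timestamp: datetime
    p95_ms: float


@dataclass
class Deploy:
    # Hypothetical record of a commit reaching production.
    commit: str
    summary: str
    deployed_at: datetime


def first_regression(samples, factor=1.5, baseline_window=5):
    """Return the first sample whose p95 exceeds `factor` times the median of
    the preceding `baseline_window` samples, or None if there is none."""
    ordered = sorted(samples, key=lambda s: s.timestamp)
    for i in range(baseline_window, len(ordered)):
        baseline = median(s.p95_ms for s in ordered[i - baseline_window:i])
        if ordered[i].p95_ms > factor * baseline:
            return ordered[i]
    return None


def suspect_deploys(deploys, regression, lookback=timedelta(hours=2)):
    """Deploys that landed within `lookback` before the regression, newest first."""
    window_start = regression.timestamp - lookback
    hits = [d for d in deploys
            if window_start <= d.deployed_at <= regression.timestamp]
    return sorted(hits, key=lambda d: d.deployed_at, reverse=True)


def build_prompt(service, regression, suspects):
    """Turn the metric/deploy correlation into a question for a coding agent."""
    lines = [
        f"Production monitoring shows p95 latency in {service} rising to "
        f"{regression.p95_ms:.0f} ms at {regression.timestamp:%Y-%m-%d %H:%M}.",
        "These changes were deployed shortly before the spike:",
    ]
    lines += [f"- {d.commit}: {d.summary}" for d in suspects]
    lines.append("Which of these changes might be responsible, and why?")
    return "\n".join(lines)


if __name__ == "__main__":
    # Toy values for illustration only; not real measurements.
    t0 = datetime(2025, 3, 1, 12, 0)
    samples = [LatencySample(t0 + timedelta(minutes=10 * i), ms)
               for i, ms in enumerate([120, 118, 125, 122, 119, 121, 240, 255])]
    deploys = [
        Deploy("a1b2c3d", "Add retry loop to card authorization",
               t0 + timedelta(minutes=50)),
        Deploy("e4f5a6b", "Update README", t0 - timedelta(hours=5)),
    ]
    regression = first_regression(samples)
    if regression is not None:
        print(build_prompt("payment-service", regression,
                           suspect_deploys(deploys, regression)))
```

A median baseline is used rather than a mean so that a single earlier outlier does not mask a later regression. The fixed lookback window is a crude heuristic, and the generated prompt is a starting point for the conversation with the agent, not a diagnosis.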

Rather than competing paradigms, traditional observability platforms and AI coding agents represent different ends of a continuous spectrum—from runtime behavior to development intent, from empirical measurement to interpretive understanding. Together, they form a comprehensive observability ecosystem that spans the entire software lifecycle.

This partnership suggests a future where the boundaries between development and operations continue to dissolve—where insights flow seamlessly between running systems and evolving codebases, creating more resilient, adaptable software through continuous feedback and understanding.

Conclusion: Observability as Dialogue

Claude Code and similar AI assistants invite us to reimagine observability not as a one-way process of gathering data, but as a two-way conversation that deepens our understanding of complex systems. This shift from monologue to dialogue represents a fundamental evolution in how we relate to the systems we build.

The most powerful aspect of this evolution may not be the technical capabilities these tools provide, but how they change our relationship with code itself, transforming it from something we create and observe into something we converse with and learn from in a continuous cycle of creation and discovery.

As these technologies mature, the line between observer and observed, creator and creation, will continue to blur. In this emerging landscape, observability becomes less about watching systems and more about engaging in meaningful dialogue with them—a conversation that enhances both human understanding and machine capability.

Perhaps the true potential of tools like Claude Code lies not just in making our systems more observable but in making our own creative processes more accessible, collaborative, and ultimately more human.
