Scaling Distributed Tracing in High-Volume Cloud-Native Applications

The Challenge of Modern Observability

As organizations transition to cloud-native architectures, debugging and monitoring distributed systems has become dramatically more complex. Microservices, serverless functions, and containerized applications create intricate webs of dependencies that traditional monitoring approaches struggle to untangle. Distributed tracing has emerged as a crucial observability tool, but scaling it effectively presents unique challenges.

Understanding the Scalability Bottlenecks

Data Volume Management

High-traffic applications can generate millions of traces per minute, creating significant storage and processing overhead. Each trace contains detailed information about request paths, latencies, and service dependencies. Without proper management, this data volume can quickly overwhelm both storage systems and analysis tools.
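
As a rough illustration of how quickly this adds up, the back-of-envelope calculation below multiplies trace rate, trace size, and sample rate. Every input (one million traces per minute, 20 spans per trace, about 500 bytes per encoded span, a 5% sample rate) is a hypothetical figure chosen for the example, not a measurement. `daily_trace_bytes` is an illustrative helper.

```python
# Back-of-envelope estimate of stored span data per day.
# All inputs below are hypothetical; substitute figures from your own system.

def daily_trace_bytes(traces_per_minute: int, spans_per_trace: int,
                      bytes_per_span: int, sample_rate: float = 1.0) -> float:
    """Return the estimated bytes of span data stored per day."""
    minutes_per_day = 24 * 60
    spans_per_day = traces_per_minute * spans_per_trace * minutes_per_day
    return spans_per_day * bytes_per_span * sample_rate


TB = 10**12
unsampled = daily_trace_bytes(1_000_000, 20, 500)
sampled = daily_trace_bytes(1_000_000, 20, 500, sample_rate=0.05)
print(f"unsampled: {unsampled / TB:.1f} TB/day")
print(f"5% sample: {sampled / TB:.2f} TB/day")
```

At these assumed rates, an unsampled pipeline would write about 14.4 TB of span data per day; keeping 5% of traces brings that down to about 0.72 TB.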

Sampling Strategies for High-Volume Traffic

Intelligent sampling becomes essential at high volume. Capturing every transaction provides complete visibility, but it is often impractical and rarely necessary. The strategies below reduce overhead while preserving visibility into the requests that matter most.

Best Practices for Scaling Distributed Tracing

1. Implement Head-Based Sampling

Head-based sampling makes the sampling decision once, at the entry point of your system, and propagates it with the trace context to every downstream service. This approach:

- Reduces the overall processing overhead

- Ensures consistent sampling across entire request paths

- Maintains end-to-end visibility for sampled requests
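
The sketch below illustrates the idea under stated assumptions. The entry point draws a random 128-bit trace ID and keeps the trace if the ID's low 64 bits fall below `rate * 2**64`, similar in spirit to the ratio-based samplers in OpenTelemetry SDKs. The function names are hypothetical, and propagating the decision is reduced to passing a boolean.

```python
import secrets
from typing import Tuple

MAX_64 = 2**64  # decisions use the low 64 bits of a 128-bit trace ID


def should_sample(trace_id: int, rate: float) -> bool:
    """Deterministic ratio sampling: the same trace ID and rate always give the same answer."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be between 0.0 and 1.0")
    bound = int(rate * MAX_64)
    return (trace_id & (MAX_64 - 1)) < bound


def start_trace(rate: float) -> Tuple[int, bool]:
    """Entry point only: mint a random 128-bit trace ID and decide once."""
    trace_id = secrets.randbits(128)
    return trace_id, should_sample(trace_id, rate)


def continue_trace(parent_sampled: bool) -> bool:
    """Downstream services reuse the propagated decision instead of re-deciding."""
    return parent_sampled


decisions = [start_trace(0.1)[1] for _ in range(100_000)]
print(f"sampled fraction: {sum(decisions) / len(decisions):.3f}")  # close to 0.1; varies per run
```

Because the decision depends only on the trace ID, any service holding the ID could recompute it, but honoring the propagated flag is simpler and keeps services consistent even if their configured rates differ.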

2. Utilize Tail-Based Sampling

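Tail-based sampling evaluates traces after they are complete, so it can keep the rare errors and slow requests that a head-based decision may discard. The cost is buffering every span of a trace until the decision is made. Decisions can be based on: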

- Error conditions

- Latency thresholds

- Business value

- Request patterns
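
A minimal, single-process sketch of tail-based sampling follows. It assumes the caller knows when a trace is complete; real deployments commonly wait a fixed interval after a trace's first span, and must route every span of a trace to the same sampler instance. `TailSampler`, its thresholds, and the `customer.tier` attribute are all hypothetical.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Span:
    trace_id: str
    name: str
    duration_ms: float
    is_error: bool = False
    attributes: dict = field(default_factory=dict)


class TailSampler:
    """Hypothetical tail sampler: buffer every span, decide once the trace is complete."""

    def __init__(self, latency_threshold_ms: float, baseline_rate: float,
                 seed: Optional[int] = None):
        self.latency_threshold_ms = latency_threshold_ms
        self.baseline_rate = baseline_rate  # share of ordinary traces kept anyway
        self.buffers: Dict[str, List[Span]] = {}
        self.rng = random.Random(seed)

    def add(self, span: Span) -> None:
        self.buffers.setdefault(span.trace_id, []).append(span)

    def complete(self, trace_id: str) -> Optional[List[Span]]:
        """Return the trace's spans if it should be kept, else None. Frees the buffer."""
        spans = self.buffers.pop(trace_id, [])
        if not spans:
            return None
        if any(s.is_error for s in spans):  # error conditions
            return spans
        if max(s.duration_ms for s in spans) >= self.latency_threshold_ms:  # latency
            return spans
        # Business value: "customer.tier" is a hypothetical attribute name.
        if any(s.attributes.get("customer.tier") == "premium" for s in spans):
            return spans
        # Everything else: keep a small random baseline of normal request patterns.
        return spans if self.rng.random() < self.baseline_rate else None


sampler = TailSampler(latency_threshold_ms=500, baseline_rate=0.01, seed=42)
sampler.add(Span("t1", "GET /checkout", 120))
sampler.add(Span("t1", "db.query", 90, is_error=True))
kept = sampler.complete("t1")
print(len(kept))  # 2: one error span keeps the whole trace
```

The random baseline keeps a small share of ordinary traces so that normal request patterns remain visible alongside the errors and outliers.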

3. Optimize Trace Data

Minimize trace payload size by:

- Recording only the attributes you actually query or alert on

- Implementing data compression

- Using efficient serialization formats

- Dropping or truncating oversized values, such as request bodies, before export
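
The sketch below combines an attribute allowlist, truncation of oversized values, compact serialization, and gzip compression. The allowlist, the 256-character cap, and the helper names are assumptions for illustration. JSON is used only to keep the example dependency-free; binary encodings such as the Protobuf format used by OTLP are typically more compact.

```python
import gzip
import json

# Hypothetical allowlist: keep only the attributes your team queries or alerts on.
ALLOWED_ATTRIBUTES = {"http.method", "http.route", "http.status_code", "service.name"}
MAX_VALUE_LEN = 256  # assumed cap on string attribute length


def slim_attributes(attributes: dict) -> dict:
    """Drop non-allowlisted attributes and truncate oversized string values."""
    slim = {}
    for key, value in attributes.items():
        if key not in ALLOWED_ATTRIBUTES:
            continue
        if isinstance(value, str) and len(value) > MAX_VALUE_LEN:
            value = value[:MAX_VALUE_LEN]
        slim[key] = value
    return slim


def encode_batch(spans: list) -> bytes:
    """Serialize spans as compact JSON (no extra whitespace) and gzip the batch."""
    payload = json.dumps(spans, separators=(",", ":")).encode("utf-8")
    return gzip.compress(payload)


raw_spans = [
    {
        "name": "GET /orders/{id}",
        "attributes": {
            "http.method": "GET",
            "http.route": "/orders/{id}",
            "http.status_code": 200,
            "http.request.body": "x" * 2000,  # large payload nobody queries
            "debug.thread": "worker-7",
        },
    }
    for _ in range(100)
]
slim_spans = [{**s, "attributes": slim_attributes(s["attributes"])} for s in raw_spans]

print("raw, gzipped: ", len(encode_batch(raw_spans)), "bytes")
print("slim, gzipped:", len(encode_batch(slim_spans)), "bytes")
```

The absolute byte counts depend entirely on the sample data; compare the two numbers on your own spans before settling on an allowlist.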

4. Leverage Cloud-Native Storage Solutions

Modern distributed tracing requires storage solutions that can handle:

- High write throughput

- Efficient querying capabilities

- Cost-effective long-term storage

- Flexible data retention policies
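
One common way to meet these requirements is to partition trace data by time: writes only append to the current partition, time-bounded queries scan only the partitions they overlap, and retention becomes dropping whole partitions instead of deleting individual records. The in-memory `PartitionedTraceStore` below is a hypothetical sketch of that layout, assuming hourly partitions, and not the design of any particular backend.

```python
from collections import defaultdict

PARTITION_SECONDS = 3600  # assumed granularity: one partition per hour


class PartitionedTraceStore:
    """Hypothetical in-memory trace store partitioned by span timestamp."""

    def __init__(self, retention_seconds: float):
        self.retention_seconds = retention_seconds
        self.partitions = defaultdict(list)  # partition index -> [(timestamp, span)]

    @staticmethod
    def _partition(timestamp: float) -> int:
        return int(timestamp // PARTITION_SECONDS)

    def write(self, span: dict, timestamp: float) -> None:
        # Append-only writes: no index rewrites or read-modify-write cycles.
        self.partitions[self._partition(timestamp)].append((timestamp, span))

    def query(self, start: float, end: float) -> list:
        # Only partitions overlapping [start, end] are scanned.
        results = []
        for p in range(self._partition(start), self._partition(end) + 1):
            results.extend(s for ts, s in self.partitions.get(p, []) if start <= ts <= end)
        return results

    def enforce_retention(self, now: float) -> int:
        """Delete partitions that lie entirely outside the retention window."""
        cutoff = self._partition(now - self.retention_seconds)
        expired = [p for p in self.partitions if p < cutoff]
        for p in expired:
            del self.partitions[p]
        return len(expired)


store = PartitionedTraceStore(retention_seconds=2 * 3600)
store.write({"trace_id": "t1"}, timestamp=0)
store.write({"trace_id": "t2"}, timestamp=5 * 3600)
print(store.query(0, 60))                     # [{'trace_id': 't1'}]
print(store.enforce_retention(now=5 * 3600))  # 1
print(store.query(0, 60))                     # []
```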

Advanced Scaling Techniques

Implementing Buffer Pools

Buffer pools help manage memory usage and reduce garbage collection overhead by:

- Pre-allocating memory for trace data

- Recycling memory buffers

- Applying backpressure when the pool is exhausted, instead of allocating without bound
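
A minimal sketch of such a pool, assuming fixed-size buffers and a non-blocking acquire by default. `BufferPool` is a hypothetical class built on the standard library's `queue.Queue`. In CPython the allocation savings are modest; the pattern pays off most in garbage-collected runtimes such as Go and the JVM, and in Go the standard `sync.Pool` covers the recycling part.

```python
import queue


class BufferPool:
    """Hypothetical fixed-size pool of reusable byte buffers for encoding spans."""

    def __init__(self, count: int, size: int):
        self.size = size
        self._free = queue.Queue(maxsize=count)
        for _ in range(count):
            self._free.put(bytearray(size))  # pre-allocate all memory up front

    def acquire(self, timeout: float = 0.0):
        """Borrow a buffer, or return None if none frees up within `timeout` seconds.

        A None result is the backpressure signal: the caller should drop spans or
        shed load rather than allocate more memory.
        """
        try:
            return self._free.get(timeout=timeout)
        except queue.Empty:
            return None

    def release(self, buf: bytearray) -> None:
        """Return a buffer for reuse instead of leaving it to the garbage collector."""
        if len(buf) != self.size:
            raise ValueError("buffer size does not match this pool")
        self._free.put_nowait(buf)


pool = BufferPool(count=2, size=64 * 1024)
first, second = pool.acquire(), pool.acquire()
print(pool.acquire())           # None: pool exhausted, caller must back off
pool.release(first)
print(pool.acquire() is first)  # True: the same buffer is recycled
```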

Distributed Trace Processing

Scale trace processing horizontally by:

- Implementing trace aggregation pipelines

- Using stream processing frameworks

- Leveraging cloud-native messaging systems
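
Horizontal scaling usually depends on sending every span of a trace to the same worker, which trace aggregation and tail-based decisions require. A sketch, assuming a fixed partition count: hash the trace ID with a stable hash (Python's built-in `hash()` is randomized per process for strings, so `hashlib` is used instead) and take the result modulo the number of partitions. Using the trace ID as the message key in a broker such as Kafka achieves the same grouping. The helper names are hypothetical.

```python
import hashlib
from collections import defaultdict


def partition_for(trace_id: str, num_partitions: int) -> int:
    """Stable mapping from trace ID to partition, identical across processes and hosts."""
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def route(spans: list, num_partitions: int) -> dict:
    """Group spans by destination partition, as a message-queue producer would."""
    routed = defaultdict(list)
    for span in spans:
        routed[partition_for(span["trace_id"], num_partitions)].append(span)
    return dict(routed)


spans = [
    {"trace_id": "t1", "name": "frontend"},
    {"trace_id": "t2", "name": "frontend"},
    {"trace_id": "t1", "name": "db.query"},
]
for partition, batch in sorted(route(spans, num_partitions=4).items()):
    print(partition, [(s["trace_id"], s["name"]) for s in batch])
```

Changing the partition count remaps most trace IDs, so resizing the worker pool mid-stream can split in-flight traces; consistent hashing reduces that churn.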

Monitoring and Maintaining Your Tracing Infrastructure

Key Metrics to Watch

Monitor your tracing infrastructure by tracking:

- Trace ingestion rate

- Storage utilization

- Query performance

- Sampling rates

- Export and ingestion error rates, including dropped spans
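
Several of these metrics are simple ratios of pipeline counters. The sketch below derives ingestion rate, effective sampling rate, and export error rate from hypothetical counter values collected over one interval. Storage utilization and query performance usually come from the storage backend's own metrics and are left out. The counter names are assumptions, not any collector's actual metric names.

```python
from dataclasses import dataclass


@dataclass
class PipelineCounters:
    """Hypothetical counter deltas collected from a tracing pipeline over one interval."""
    interval_seconds: float
    spans_received: int   # spans that reached the collector
    spans_sampled: int    # spans kept after sampling
    export_failures: int  # sampled spans dropped or rejected on export


def health_report(c: PipelineCounters) -> dict:
    """Derive rates worth graphing and alerting on."""
    received = max(c.spans_received, 1)  # guard against idle intervals
    sampled = max(c.spans_sampled, 1)
    return {
        "ingestion_rate_per_s": c.spans_received / c.interval_seconds,
        "effective_sampling_rate": c.spans_sampled / received,
        "export_error_rate": c.export_failures / sampled,
    }


report = health_report(PipelineCounters(60, 600_000, 30_000, 300))
print(report)
```

Alerting when the effective sampling rate drifts from the configured rate is a cheap way to catch a misconfigured sampler.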

Cost Optimization

Control costs while maintaining effectiveness by:

- Implementing tiered storage strategies

- Optimizing sampling rates

- Managing data retention policies

- Monitoring resource utilization
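
Tiered storage and retention can be expressed as a small policy table. In the sketch below, the tier names, age limits, and especially the relative costs are placeholders rather than real provider pricing. `steady_state_monthly_cost` assumes a constant daily ingest, so each tier eventually holds one day's data for every day in its age range.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tier:
    name: str
    max_age_days: int     # traces older than this move to the next tier
    relative_cost: float  # placeholder cost per GB-month, relative to hot storage


# Hypothetical tiering policy; tune ages and costs to your provider and query patterns.
TIERS = (
    Tier("hot", 7, 1.0),
    Tier("warm", 30, 0.3),
    Tier("cold", 365, 0.05),
)


def tier_for(age_days: int) -> Optional[Tier]:
    """Return the tier for data of this age, or None once it is past retention."""
    for tier in TIERS:
        if age_days <= tier.max_age_days:
            return tier
    return None


def steady_state_monthly_cost(gb_per_day: float, tiers=TIERS) -> float:
    """Monthly cost once every tier is full: each tier holds gb_per_day times its span of days."""
    total, previous_limit = 0.0, 0
    for tier in tiers:
        days_in_tier = tier.max_age_days - previous_limit
        total += gb_per_day * days_in_tier * tier.relative_cost
        previous_limit = tier.max_age_days
    return total


tiered = steady_state_monthly_cost(1.0)
all_hot = steady_state_monthly_cost(1.0, tiers=(Tier("hot", 365, 1.0),))
print(f"tiered: {tiered:.2f}, all hot: {all_hot:.2f} (relative units)")
```

With these placeholder numbers, ingesting one GB per day and keeping it for a year costs about 30.65 relative units per month when tiered, versus 365 if everything stays in hot storage.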

Future Trends in Distributed Tracing

OpenTelemetry Integration

The OpenTelemetry project is standardizing observability data collection and transmission. Future-proof your tracing infrastructure by:

- Adopting OpenTelemetry standards

- Implementing vendor-agnostic instrumentation

- Contributing to the open-source ecosystem
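
Vendor-agnostic instrumentation rests on standard context propagation. OpenTelemetry's default propagator uses the W3C Trace Context `traceparent` header, which carries the trace ID, the parent span ID, and a sampled flag between services. The parser below is a minimal sketch for version `00` headers only, written to show the format; in production, use the propagators your OpenTelemetry SDK provides rather than hand-rolled parsing. The function and class names are hypothetical.

```python
import re
from typing import NamedTuple, Optional

# traceparent = version "-" trace-id "-" parent-id "-" trace-flags (lowercase hex)
_TRACEPARENT = re.compile(r"([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})")


class TraceParent(NamedTuple):
    trace_id: str   # 32 hex characters (16 bytes)
    parent_id: str  # 16 hex characters (8 bytes), the caller's span ID
    sampled: bool   # bit 0x01 of trace-flags


def parse_traceparent(header: str) -> Optional[TraceParent]:
    """Parse a version-00 traceparent header; return None if it is malformed."""
    match = _TRACEPARENT.fullmatch(header.strip())
    if match is None:
        return None
    version, trace_id, parent_id, flags = match.groups()
    if version != "00":
        return None  # simplification: this sketch handles only version 00
    if trace_id == "0" * 32 or parent_id == "0" * 16:
        return None  # all-zero IDs are invalid
    return TraceParent(trace_id, parent_id, bool(int(flags, 16) & 0x01))


def format_traceparent(tp: TraceParent) -> str:
    """Serialize for an outgoing request; only the sampled flag is emitted."""
    return f"00-{tp.trace_id}-{tp.parent_id}-{'01' if tp.sampled else '00'}"


incoming = parse_traceparent("00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01")
print(incoming.sampled)  # True
```

A service forwarding the context would keep the trace ID and sampled flag but substitute its own span ID as `parent_id` before calling `format_traceparent`.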

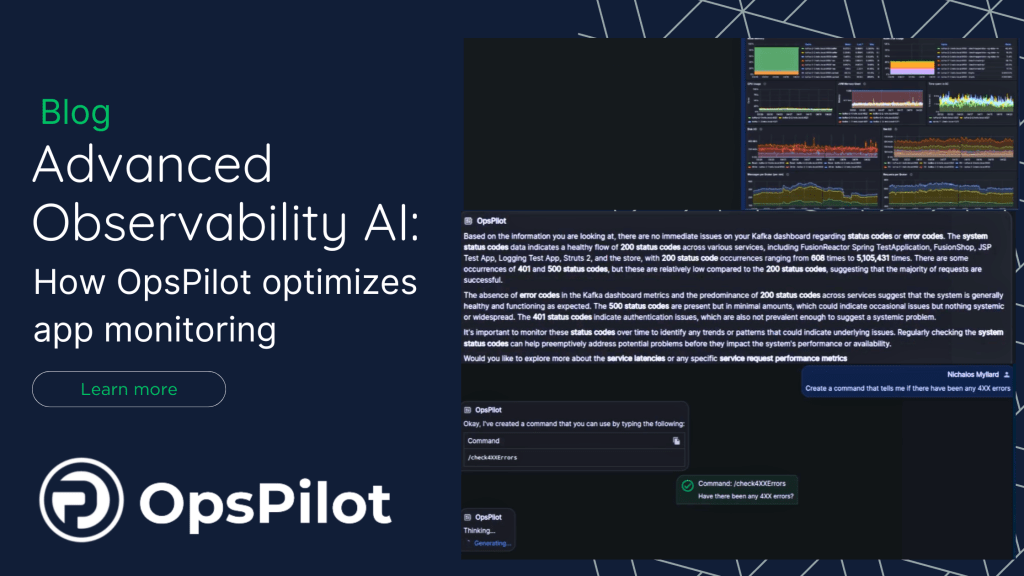

AI-Powered Trace Analysis

Machine learning is increasingly applied to trace analysis for:

- Automated anomaly detection

- Pattern recognition

- Predictive performance optimization

- Root cause analysis
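
As a deliberately simple stand-in for the machine-learning approaches above, the sketch below learns an exponentially weighted mean and variance of latency and flags observations more than three standard deviations away. `LatencyAnomalyDetector` and its defaults (`alpha=0.1`, `threshold=3.0`, a 20-observation warm-up) are assumptions for illustration, not a model used by any particular product.

```python
import math


class LatencyAnomalyDetector:
    """Flag latencies far from an exponentially weighted running baseline."""

    def __init__(self, alpha: float = 0.1, threshold: float = 3.0, warmup: int = 20):
        self.alpha = alpha          # weight given to the newest observation
        self.threshold = threshold  # standard deviations that count as anomalous
        self.warmup = warmup        # observations to see before flagging anything
        self.mean = 0.0
        self.var = 0.0
        self.count = 0

    def observe(self, latency_ms: float) -> bool:
        """Update the baseline and return True if this latency looks anomalous."""
        self.count += 1
        if self.count == 1:
            self.mean = latency_ms
            return False
        deviation = latency_ms - self.mean
        std = math.sqrt(self.var)
        is_anomaly = (self.count > self.warmup and std > 0
                      and abs(deviation) > self.threshold * std)
        # Incremental exponentially weighted updates of mean and variance.
        self.mean += self.alpha * deviation
        self.var = (1 - self.alpha) * (self.var + self.alpha * deviation ** 2)
        return is_anomaly


detector = LatencyAnomalyDetector()
normal = [100.0, 102.0] * 100  # steady latencies alternating between 100 and 102 ms
flags = [detector.observe(x) for x in normal]
print(any(flags[100:]))          # False
print(detector.observe(1000.0))  # True
```

Flagged values still update the baseline here, so a sustained shift eventually becomes the new normal; a fuller detector might exclude anomalies from the update or keep separate baselines per endpoint.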

Conclusion

Scaling distributed tracing requires a thoughtful approach to data management, storage, and processing. Organizations can maintain comprehensive observability while managing costs and complexity by implementing appropriate sampling strategies, optimizing trace data, and leveraging modern cloud-native technologies.

The future of distributed tracing lies in standardization through OpenTelemetry and advanced analysis capabilities powered by artificial intelligence. Organizations that invest in scalable tracing infrastructure today will be better positioned to handle tomorrow’s observability challenges.